Six researchers at Apple have recently published a groundbreaking paper revealing that Large Language Models (LLMs) lack true formal reasoning capabilities. The study argues that LLMs primarily rely on sophisticated probabilistic pattern-matching rather than genuine logical reasoning.

While LLMs have made significant strides in performance, their reasoning abilities have not kept pace. The paper is particularly important as it raises concerns about the reliability of reported performance metrics.

In an era of heightened excitement around LLMs, exaggerated claims often mislead the public and skew perceptions. Understanding the true strengths and limitations of LLMs is crucial for advancing the field responsibly, enabling researchers to target improvements where these models still fall short while fostering realistic expectations about their capabilities.

Let us dive deep into the topic and the paper “GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning in Large Language Models”.

What is Reasoning?

Reasoning is a cognitive process that enables drawing conclusions, making predictions, and inferring new facts by integrating existing knowledge with newly acquired information.

Reasoning is a crucial human capability that, when transferred to software programs, enables them to think rationally, akin to the human brain, and effectively mimic human behavior.

Formal or mathematical reasoning is the software tooling that enables computers to think rationally. It allows them to derive conclusions using strict, well-defined rules of logic and mathematical structures, such as axioms, theorems, and proofs. It involves following systematic steps where each inference must follow logically from prior steps or established mathematical truths.

In AI, formal or mathematical reasoning is essential so that programs can think rationally, similarly to a human brain, and mimic human behavior.

Some examples of reasoning include:

Deductive Reasoning: Drawing specific conclusions from general principles.

>>>> If all humans are mortal (Premise 1) and Socrates is a human (Premise 2), then Socrates is mortal (Conclusion).

Inductive Reasoning: Generalizing from specific observations.

>>>> After observing that the sun has risen in the east every morning, one might conclude that the sun always rises in the east.

Abductive Reasoning: Inferring the most likely explanation from available evidence.

>>>> If a person walks into a room and finds the floor wet, they might conclude that it has rained recently or that someone spilled water.

Complex Multi-Step Reasoning.

Mathematical Problem-Solving: Taking multiple steps to arrive at the solution.

To solve the equation (2x + 3 = 11), one first subtracts 3 from both sides (resulting in (2x = 8)), then divides by 2 (resulting in (x = 4)).

Planning a Trip: Carefully considering each step and dependencies to arrive at a comprehensive plan.

To plan a trip, one might start by deciding the destination, then researching transportation options, comparing costs, and finally booking accommodations.
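The equation example above can be written out as explicit steps. This is only a minimal sketch: the function name `solve_linear` and its step format are invented for illustration, and it handles only equations of the form ax + b = c.

```python
from fractions import Fraction

def solve_linear(a, b, c):
    """Solve a*x + b = c for x, recording each intermediate step."""
    if a == 0:
        raise ValueError("a must be non-zero")
    steps = []
    # Step 1: subtract b from both sides.
    rhs = Fraction(c) - Fraction(b)
    steps.append(f"{a}x = {rhs}")
    # Step 2: divide both sides by a.
    x = rhs / Fraction(a)
    steps.append(f"x = {x}")
    return x, steps

x, steps = solve_linear(2, 3, 11)
print(steps)  # ['2x = 8', 'x = 4']
```

Each step depends on the result of the previous one, which is what makes the problem multi-step.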

So, what is Formal or Mathematical Reasoning?

Formal reasoning is a mathematical discipline, rooted in logic, that lays the groundwork for enabling computers to think rationally. It equips them to address a wide range of reasoning problems, including those previously mentioned, as well as many others. This process allows computers to draw conclusions based on strict, well-defined rules of logic and mathematical structures, such as axioms, theorems, and proofs. They follow systematic steps, ensuring that each inference logically follows from prior steps or established mathematical truths.

Why is Formal Reasoning Important?

Because it is essential for advancing AI and its real-world applications.

Formal reasoning plays a vital role in problem-solving across various scientific and practical fields. This cognitive skill enables programs to think rationally, much like the human brain, effectively mimicking human behavior and addressing complex problems.

Logical reasoning allows LLMs to grasp concepts more deeply, unlocking new capabilities for tasks such as scientific discovery and advanced problem-solving.

It is crucial for tackling complex, multi-step problems, reducing hallucinations, and improving overall reliability and effectiveness. Ultimately, enhancing logical reasoning will bolster trust in LLMs, ensuring they deliver value in mission-critical applications like healthcare.

What are the Findings of the Paper?

The paper found that:

1) LLMs are not capable of genuine logical reasoning;

2) the reasoning process in LLMs is probabilistic pattern-matching rather than formal reasoning;

3) LLMs attempt to replicate reasoning by copying the steps observed in their training data, without real understanding of what they are actually doing.

Because the reasoning is not formal, conclusions are not deterministic and are susceptible to changes in how a prompt is worded. The study found that adding text to the original prompt decreased performance, regardless of whether the added text contributed to the context of the problem.

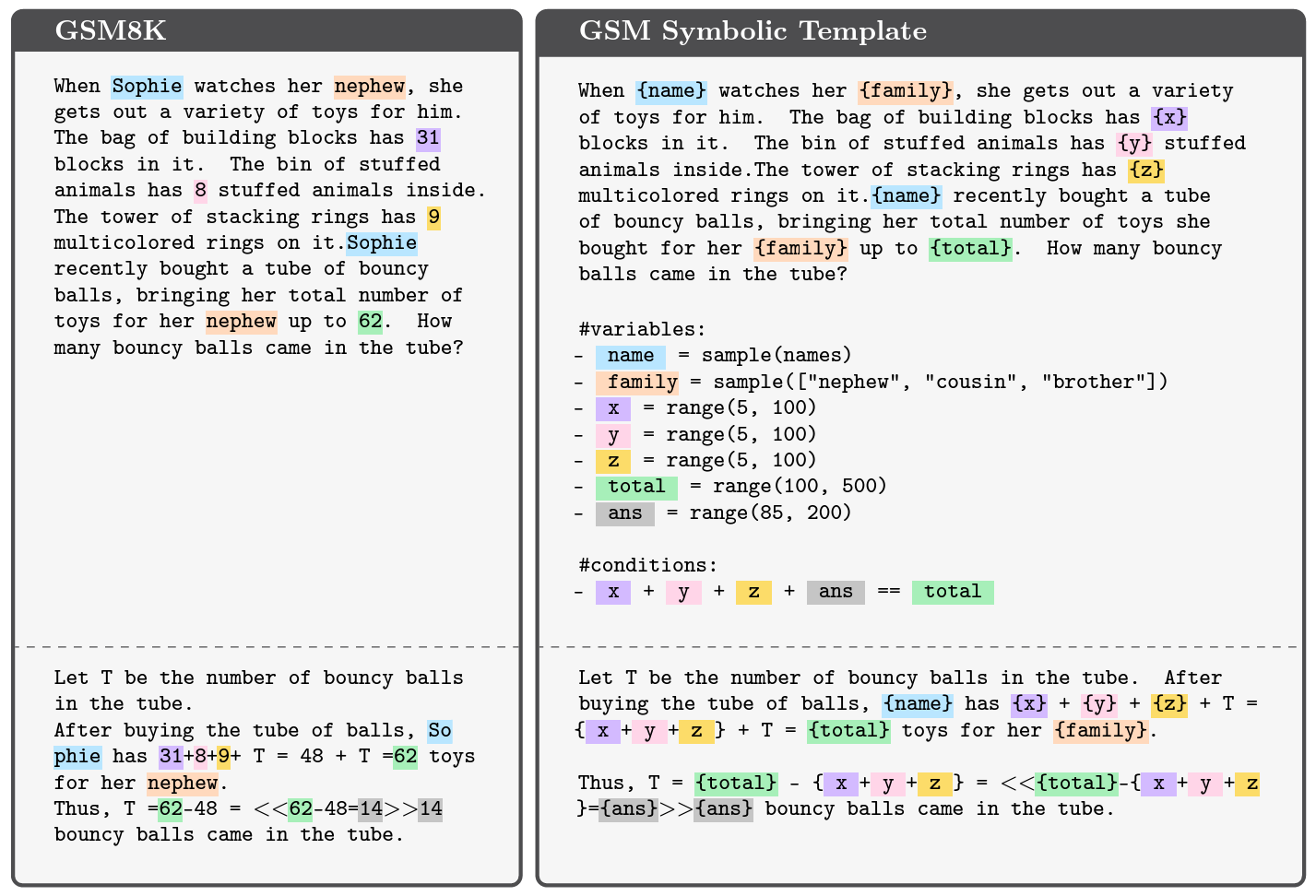

Image – An example from the GSM-NoOp dataset: We add seemingly relevant statements to the questions that are, in fact, irrelevant to the reasoning and conclusion. However, the majority of models fail to ignore these statements and blindly convert them into operations, leading to mistakes.
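The idea of an irrelevant "no-op" statement can be sketched in a few lines. The question below is a made-up example, not an item from the GSM-NoOp dataset, and `naive_solver` is a deliberately flawed heuristic invented here to caricature the "turn every number into an operation" failure described in the caption. It is not a model of how any LLM actually works.

```python
import re

def make_question(day1, day2, noop_clause=""):
    """Build a toy word problem; the optional clause never changes the answer."""
    question = (f"Ana picks {day1} apples on Monday and {day2} apples on Tuesday"
                f"{noop_clause}. How many apples does Ana have?")
    return question, day1 + day2

def naive_solver(question):
    """Flawed on purpose: add the first two numbers, subtract any later ones."""
    numbers = [int(n) for n in re.findall(r"\d+", question)]
    return sum(numbers[:2]) - sum(numbers[2:])

base_q, base_a = make_question(12, 7)
noop_q, noop_a = make_question(
    12, 7, ", although 3 of Tuesday's apples are smaller than average")

print(base_a, naive_solver(base_q))  # 19 19
print(noop_a, naive_solver(noop_q))  # 19 16
```

The correct answer is 19 in both cases. Only the solver that treats the extra number as an operand gets the second question wrong.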

The study also noted that reasoning performance stayed closer to expectation when only names were changed, but dropped substantially when values were altered.
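The difference between changing names and changing values can be illustrated with a small template. This is a sketch in the spirit of templated question variants: the template, the name pool, and the value ranges are all hypothetical and are not taken from the paper.

```python
import random

# Hypothetical template and name pool, invented for illustration only.
TEMPLATE = ("{name} has {x} pencils and buys {y} more. "
            "How many pencils does {name} have now?")
NAMES = ["Sophie", "Liam", "Aisha", "Mateo"]

def instantiate(name, x, y):
    """Fill the template and compute the ground-truth answer from the values."""
    return TEMPLATE.format(name=name, x=x, y=y), x + y

def name_variant(rng, x, y):
    """Change only the name; the correct answer stays the same."""
    return instantiate(rng.choice(NAMES), x, y)

def value_variant(rng, name):
    """Change only the numbers; the correct answer is recomputed."""
    x, y = rng.randint(2, 50), rng.randint(2, 50)
    return instantiate(name, x, y)

rng = random.Random(0)  # fixed seed so the variants are reproducible
base_q, base_a = instantiate("Sophie", 5, 8)
name_q, name_a = name_variant(rng, 5, 8)
value_q, value_a = value_variant(rng, "Sophie")

for question, answer in [(base_q, base_a), (name_q, name_a), (value_q, value_a)]:
    print(answer, "|", question)
```

A system that truly reasons should score equally well on all three variants, since the solution procedure is identical and only the surface details differ.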

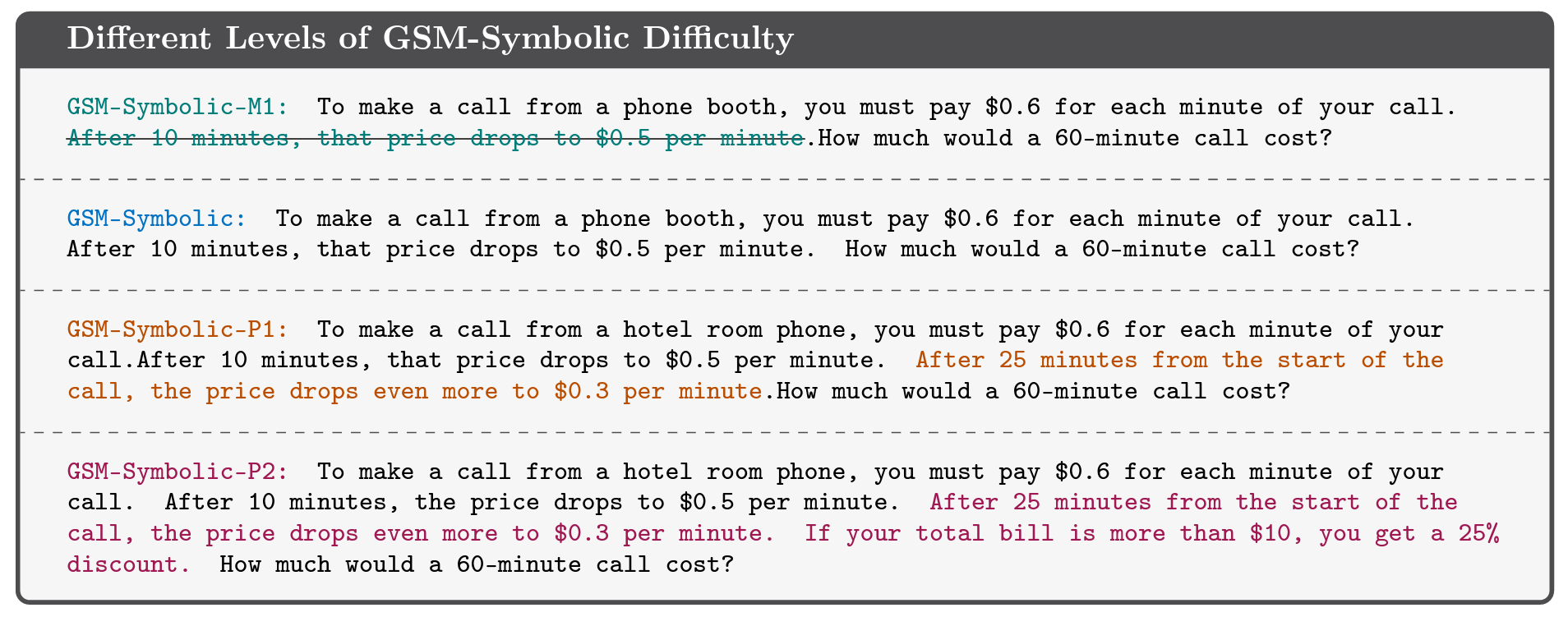

Image – Modifying the difficulty level of GSM-Symbolic by modifying the number of clauses.

The findings are very important, since they challenge some marketing-driven studies that highlight LLMs’ impressive reasoning capabilities. They help explain why different verbalizations of the same question lead to such large variance in answers.

If LLMs do not Reason, what do they do?

It is called Analogical Reasoning.

It is not really reasoning in the formal sense defined in computer science, but rather a sophisticated form of probabilistic pattern-matching in which conclusions are reached based on the similarities between the things being compared. It works like a sort of macro that performs search, copy and paste.

More on Analogical Reasoning in the next post.

Credits: GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning in Large Language Models. Iman Mirzadeh, Keivan Alizadeh, Hooman Shahrokhi, Oncel Tuzel, Samy Bengio, Mehrdad Farajtabar. Apple.